When trying to reach a mutual agreement with a sophisticated agent, we usually form an initial impression from previous negotiations with that agent or with similar agents. We then take into account the opponent's preferences over the current issues and its behavior during the new interaction. This year at the ANL challenge, we are looking forward to agents that exhibit precisely that behavior: the developed agent should be capable of building an opponent model during the current negotiation while learning from previous negotiations. Thus, we aim to provide as much flexibility as possible. At every negotiation, agents obtain a directory path where they can save and load anything they want. This state is stored as files on disk, and the path is passed to the agent every time a negotiation is initiated.
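To make "learning from previous negotiations" concrete, here is a minimal sketch of how an agent might turn the provided storage directory into per-opponent memory. The class `OpponentMemory`, the JSON file layout, and the rule "start slightly above the average utility of past agreements" are all hypothetical illustrations, not part of GeniusWebPython or the template agent.

```python
import json
from pathlib import Path
from typing import List


class OpponentMemory:
    """Hypothetical per-opponent memory, persisted as JSON in the provided storage directory."""

    def __init__(self, storage_dir: str, opponent_name: str) -> None:
        self.path = Path(storage_dir) / f"{opponent_name}.json"
        # Load the sessions saved earlier against this opponent, if there are any.
        self.history: List[dict] = (
            json.loads(self.path.read_text()) if self.path.exists() else []
        )

    def initial_target(self, default: float = 0.9) -> float:
        """Initial target utility derived from previous agreements with this opponent."""
        utilities = [h["utility"] for h in self.history if h["agreement"]]
        if not utilities:
            return default  # no experience yet: fall back to a fixed target
        # Aim slightly above the historical average agreement utility.
        return min(1.0, sum(utilities) / len(utilities) + 0.05)

    def record(self, agreement: bool, utility: float) -> None:
        """Append the outcome of the finished session and write it back to disk."""
        self.history.append({"agreement": agreement, "utility": utility})
        self.path.write_text(json.dumps(self.history))
```

An agent would create the memory when a session starts, use `initial_target()` to seed its bidding strategy, and call `record(...)` once the session ends; the opponent model built during the current session would then refine that starting point.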

The competition takes place during AAMAS 2023 (29 May to 2 June 2023) in London, UK. There will be $600 in prize money for ANL participants, distributed as shown in the following table. We will also support a certain number of finalists in attending AAMAS to present their agents.

| Ranking | Utility category | Social welfare category |
|---|---|---|
| Winner | $200 | $200 |
| Runner-up | $100 | $100 |

A negotiation setup consists of a set of rules (protocol) to abide by, an opponent to negotiate against, and a problem (scenario) to negotiate over. We describe all three components in this section.

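The Stacked Alternating Offers Protocol (SAOP) is used as the negotiation protocol. The starting agent has to make an opening offer, after which the agents take turns performing one of three actions:

- Make a counter offer (thus rejecting and overriding the previous offer).
- Accept the offer.
- Walk away (i.e., end the negotiation without an agreement).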

This process is repeated in a turn-taking fashion until an agreement or the deadline is reached. If no agreement has been reached by the deadline (e.g., 60 seconds), the negotiation fails. Please remember to test and report the performance of your agent under different deadlines. If there is a deal, each agent receives a score at the end of the session equal to the utility of the deal under its own utility function. Otherwise, the score equals the reservation value of your preference profile; since no deal has been reached, this is a missed opportunity for both parties.
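The turn-taking loop and scoring rule described above can be sketched as a small simulation. This is an illustration only, not the GeniusWeb implementation: outcomes are plain string labels, the deadline is a round limit instead of wall-clock seconds (for determinism), and `run_saop` and the linearly conceding `make_agent` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple, Union

Outcome = str  # toy outcomes; real GeniusWeb bids assign a value to every issue


@dataclass
class Offer:
    bid: Outcome


@dataclass
class Accept:
    bid: Outcome


@dataclass
class EndNegotiation:
    pass


Action = Union[Offer, Accept, EndNegotiation]
# An agent maps (last offer received, round index) to its next action.
Agent = Callable[[Optional[Outcome], int], Action]


def run_saop(agents: List[Agent], utilities: List[Dict[Outcome, float]],
             reservations: Tuple[float, float], max_rounds: int) -> Tuple[float, float]:
    """Round-based SAOP loop between two agents; returns both agents' scores."""
    last_offer: Optional[Outcome] = None
    for rnd in range(max_rounds):
        action = agents[rnd % 2](last_offer, rnd)  # agents take turns
        if isinstance(action, Accept):
            # Only the offer currently on the table can be accepted
            # (so the starting agent must open with an offer).
            if last_offer is None or action.bid != last_offer:
                raise ValueError("can only accept the latest offer")
            return utilities[0][last_offer], utilities[1][last_offer]
        if isinstance(action, EndNegotiation):
            break  # walking away ends the session without a deal
        last_offer = action.bid  # a counter offer replaces the previous offer
    # No deal (deadline reached or an agent walked away): reservation values.
    return reservations


def make_agent(util: Dict[Outcome, float], max_rounds: int) -> Agent:
    """Toy time-dependent agent whose target drops linearly from 1.0 to 0.5."""
    ranked = sorted(util, key=util.get, reverse=True)

    def agent(last_offer: Optional[Outcome], rnd: int) -> Action:
        target = 1.0 - 0.5 * rnd / max_rounds
        if last_offer is not None and util[last_offer] >= target:
            return Accept(last_offer)
        acceptable = [o for o in ranked if util[o] >= target]
        # Offer the least preferred outcome that still meets the target.
        return Offer(acceptable[-1] if acceptable else ranked[0])

    return agent
```

For example, with `u_a = {"a": 1.0, "b": 0.62, "c": 0.2}` and `u_b = {"a": 0.2, "b": 0.72, "c": 1.0}`, two such agents with a 10-round limit agree on `"b"` in round 8 and score `(0.62, 0.72)`; with a 4-round limit they run out of time and both receive their reservation values. Running the same pair under several limits is a cheap way to follow the advice to test at different deadlines.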

The scenario consists of a discrete outcome space (or domain) and a preference profile for each agent. The preference profile serves as a utility function that maps every outcome in the domain to a utility value for that agent, where 0 and 1 are the worst and best utility, respectively. The negotiations are performed under incomplete information, meaning that the utility function of the opponent is unknown.
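As an illustration of how a preference profile maps outcomes to [0, 1], the sketch below assumes a linear additive profile: each issue has a weight, each issue value has a utility, and a bid's utility is the weighted sum. The section does not say which profile type ANL scenarios use, so treat this form, the function name, and the example issues as assumptions.

```python
import math
from typing import Dict

Bid = Dict[str, str]  # issue name -> chosen value


def linear_additive_utility(bid: Bid, weights: Dict[str, float],
                            value_utils: Dict[str, Dict[str, float]]) -> float:
    """Utility of a complete bid under a (hypothetical) linear additive profile."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("issue weights must sum to 1")
    if set(bid) != set(weights):
        raise ValueError("a bid must assign a value to every issue")
    # Weighted sum of per-issue value utilities; stays in [0, 1] when each
    # value utility is in [0, 1] and the weights sum to 1.
    return sum(weights[issue] * value_utils[issue][value] for issue, value in bid.items())
```

With weights `{"price": 0.6, "delivery": 0.4}` and value utilities `{"price": {"low": 1.0, "high": 0.0}, "delivery": {"fast": 1.0, "slow": 0.5}}`, the bid `{"price": "low", "delivery": "slow"}` is worth 0.6 × 1.0 + 0.4 × 0.5 = 0.8 to this agent. Under incomplete information, the opponent's weights and value utilities are exactly what an opponent model tries to estimate.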

Entrants to the competition have to develop and submit an autonomous negotiating agent in Python that runs on GeniusWebPython. GeniusWeb is a negotiation platform where you can develop general negotiating agents and create negotiation domains and preference profiles. The platform allows you to simulate negotiation sessions and run tournaments. At every negotiation, agents obtain a file path where they can save anything they want, and an agent gets the chance to process everything that has been saved for it on multiple occasions during the tournament.

A basic example agent that can handle the current challenge can be found on GitHub. We aim to provide a quick-start guide and FAQ on this GitHub page, which we will improve incrementally based on feedback received through channels such as Discord.

If the agents reach an agreement, each agent's score is the utility of the agreed outcome under its own preference profile; this utility usually differs between the two agents. A utility of 0 is obtained if no agreement is reached. There are two categories:

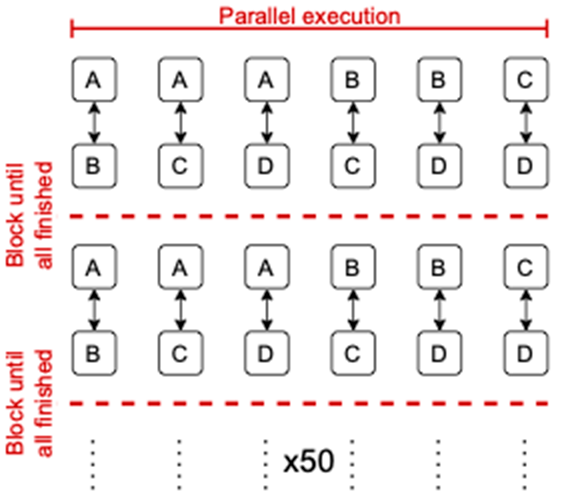
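The two categories are defined in the figure above and the prize table. The sketch below assumes that the utility category ranks agents by the average utility they obtained and that the social welfare category ranks them by the average sum of both agents' utilities in the sessions they took part in. Both definitions, the session tuple layout, and the function name `leaderboard` are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (agent_a, agent_b, utility_a, utility_b) for one session; 0.0 if no agreement.
Session = Tuple[str, str, float, float]


def leaderboard(sessions: List[Session]) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Average individual utility and average social welfare per agent."""
    util_sum: Dict[str, float] = defaultdict(float)
    welfare_sum: Dict[str, float] = defaultdict(float)
    count: Dict[str, int] = defaultdict(int)
    for a, b, ua, ub in sessions:
        welfare = ua + ub  # assumed: social welfare = sum of both utilities
        for name, utility in ((a, ua), (b, ub)):
            util_sum[name] += utility
            welfare_sum[name] += welfare
            count[name] += 1
    avg_util = {n: util_sum[n] / count[n] for n in count}
    avg_welfare = {n: welfare_sum[n] / count[n] for n in count}
    return avg_util, avg_welfare
```

Under these assumptions, a hard-bargaining agent can score well in the utility category while dragging down its social welfare average whenever its sessions end without a deal.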

You are allowed to store data in a directory that is provided to your agent, and you can use this storage for learning purposes. NOTE: Due to parallelization, your agent will be run against every unique opponent concurrently. Take this into account when saving and loading data.
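One way to cope with concurrent sessions is to keep one file per opponent (each concurrent session of your agent faces a different opponent) and to write each file atomically, so a session never reads a half-written file. The helpers below are a hypothetical sketch using only the standard library: the data is written to a temporary file in the same directory and then swapped in with `os.replace`, which replaces the target in a single step on the same filesystem. Opponent names are assumed to be safe to use as file names.

```python
import json
import os
import tempfile
from pathlib import Path


def save_state(storage_dir: str, opponent: str, state: dict) -> None:
    """Atomically write the learned state for one opponent."""
    directory = Path(storage_dir)
    directory.mkdir(parents=True, exist_ok=True)
    target = directory / f"{opponent}.json"
    # Write to a uniquely named temp file next to the target, then swap it in.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, target)
    except BaseException:
        os.unlink(tmp_path)  # do not leave stray temp files behind
        raise


def load_state(storage_dir: str, opponent: str) -> dict:
    """Return the saved state for an opponent, or an empty dict if none exists."""
    target = Path(storage_dir) / f"{opponent}.json"
    if not target.exists():
        return {}
    with open(target) as f:
        return json.load(f)
```

Data shared across all opponents (for example, global statistics) would still need extra care, since several sessions could update it at the same time; keeping it in per-opponent files and merging on load avoids that race.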

The following figure illustrates the execution procedure of the tournament. As described above, all agent combinations negotiate in parallel on a randomly generated negotiation problem. The next round is blocked until all agents have finished their negotiation sessions, which allows every agent to know all opposing agents at the start of each following session. A total of 50 negotiation sessions are run.
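The round structure can be sketched with a thread pool: every pairing of a round is submitted at once, and collecting all results acts as the barrier before the next round starts. This is a hypothetical model of the procedure, not the organizers' runner; `negotiate` stands in for a full session, a random seed stands in for a randomly generated problem, and the number of rounds is a parameter.

```python
import itertools
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Pair = Tuple[str, str]
# negotiate(agent_a, agent_b, problem_seed) -> (utility_a, utility_b)
Negotiate = Callable[[str, str, int], Tuple[float, float]]


def run_tournament(agents: List[str], negotiate: Negotiate, n_rounds: int,
                   seed: int = 0) -> List[Dict[Pair, Tuple[float, float]]]:
    """Run every pairing in parallel per round, with a barrier between rounds."""
    rng = random.Random(seed)
    pairs = list(itertools.combinations(agents, 2))  # all unique agent pairs
    history: List[Dict[Pair, Tuple[float, float]]] = []
    with ThreadPoolExecutor() as pool:
        for _ in range(n_rounds):
            problem_seed = rng.randrange(2**32)  # stands in for a random scenario
            # Submit every pairing of this round at once; they run concurrently.
            futures = {p: pool.submit(negotiate, p[0], p[1], problem_seed) for p in pairs}
            # Waiting for every result blocks until the whole round has finished,
            # so the next round only starts afterwards.
            history.append({p: f.result() for p, f in futures.items()})
    return history
```

Because each agent appears in several pairings of the same round, this model also shows why your agent runs against multiple opponents concurrently and why its storage must tolerate parallel sessions.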

Participants submit their agent source code and (optionally) an academic report in a zipped folder. For the code, include the directory containing your agent (structured like the template agent's directory in the GitHub repository) in the zipped folder. Ensure your agent works within the supplied environment by running it through `run.py`. Please submit your application through the following link: Submission Form

Each participant should prepare a 2-4 page report describing the design of their agent according to academic standards. Depending on the available slots at AAMAS 2023, the best teams can give a brief presentation of their agent.

Furthermore, selected agent papers are planned to be published in the proceedings of the upcoming AAMAS. The organizers of this league will evaluate the reports. To be eligible, the design should contribute to the negotiation community. The report should address the following aspects:

You can find the finalists for each league below. The ordering does not reflect the actual ranking, which will be announced in the ANAC session at AAMAS 2023.

15 submissions