Automated Negotiation League

Automated Negotiating Agents Competition (ANAC2021)

Submission Form

Design a negotiation agent for bilateral negotiation that can learn from every previous encounter while the tournament progresses. The agent that obtains the highest average score (utility) wins.

In previous years of ANL, either no learning across negotiation sessions was allowed or learning options were limited, e.g. to learning over identically repeated negotiation sessions. This year we aim to provide as much flexibility as possible while safeguarding against unfair play. At every negotiation, agents obtain a file path where they can save anything they want. On multiple occasions during the tournament, an agent gets a chance to process everything that has been saved into a persistent state for that agent. This state is stored as a file on disk, and its path is passed to the agent every time a negotiation is initiated.

The competition takes place during IJCAI 2021, August 2021, in Montreal, Canada. An estimated $5000 in total (subject to change) will be made available to participants as prize money and student travel grants.

A negotiation setup consists of a set of rules (protocol) to abide by, an opponent to negotiate against, and a problem (scenario) to negotiate over. We describe all three components in this section.

The Stacked Alternating Offers Protocol (SAOP) is used as the negotiation rules. Here, the starting agent has to make an opening offer, after which the agents take turns while performing one of 3 actions:

To prevent agents from negotiating indefinitely, a deadline of 60 seconds is set.
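The turn-taking and the deadline can be illustrated with a minimal sketch. It is written in Python for brevity and is not GeniusWeb code: the classes `Offer`, `Accept` and `EndNegotiation` and the function `run_session` are hypothetical names, and the sketch assumes the three actions are making an offer, accepting the standing offer, and ending the negotiation, as SAOP is usually described.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical action types for this sketch; these are not GeniusWeb classes.
@dataclass(frozen=True)
class Offer:
    bid: dict

@dataclass(frozen=True)
class Accept:
    pass

@dataclass(frozen=True)
class EndNegotiation:
    pass

# An agent is modelled as a function: (standing offer or None, progress in [0, 1]) -> action.
Agent = Callable[[Optional[dict], float], object]

def run_session(first: Agent, second: Agent, deadline_s: float = 60.0,
                clock: Callable[[], float] = time.monotonic) -> Optional[dict]:
    """Alternate turns until a bid is accepted, a party walks away, or time runs out.

    Returns the agreed bid, or None when no agreement is reached.
    """
    start = clock()
    agents = (first, second)
    standing = None  # most recent offer on the table
    turn = 0
    while True:
        elapsed = clock() - start
        if elapsed >= deadline_s:
            return None  # deadline reached without agreement
        action = agents[turn % 2](standing, elapsed / deadline_s)
        if isinstance(action, Offer):
            standing = action.bid
        elif isinstance(action, Accept) and standing is not None:
            return standing  # agreement on the standing offer
        else:
            return None  # walk-away, or an invalid action such as accepting nothing
        turn += 1
```

Passing the clock as a parameter is a design choice of this sketch only: it lets the deadline behaviour be exercised without waiting for real time to pass.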

The opponents will be agents that are submitted by other competitors in the ANL.

The scenario consists of a discrete outcome space (or domain) and a preference profile per agent. This preference profile is used as a utility function to map the problem space to a utility value for that agent. Here, 0 and 1 are the worst and best utility, respectively, that can be obtained by the agent. The negotiations are performed under incomplete information, meaning that the utility function of the opponent is unknown.
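As an illustration of how a preference profile can map a discrete outcome space to utilities in [0, 1], here is a minimal Python sketch. The text above does not specify the form of the utility function; the linear additive form (a weighted sum over issues) is an assumption of this sketch, and the domain, issue names and numbers are made up for the example.

```python
from itertools import product

# Hypothetical two-issue domain: each issue has a finite set of values.
domain = {
    "price": ["low", "medium", "high"],
    "delivery": ["fast", "slow"],
}

# Hypothetical preference profile (assumed linear additive): issue weights sum
# to 1 and each value is scored in [0, 1], so the utility also lies in [0, 1].
profile = {
    "weights": {"price": 0.7, "delivery": 0.3},
    "values": {
        "price": {"low": 0.0, "medium": 0.5, "high": 1.0},
        "delivery": {"fast": 0.0, "slow": 1.0},
    },
}

def utility(bid, profile):
    """Weighted sum of the per-issue value scores of a complete bid."""
    return sum(
        weight * profile["values"][issue][bid[issue]]
        for issue, weight in profile["weights"].items()
    )

def all_bids(domain):
    """Enumerate every outcome in the discrete outcome space."""
    issues = list(domain)
    for combo in product(*(domain[issue] for issue in issues)):
        yield dict(zip(issues, combo))

# The agent knows only its own profile; the opponent's profile stays hidden.
best = max(all_bids(domain), key=lambda bid: utility(bid, profile))
```

Enumerating all bids is feasible only for small domains; for larger ones an agent would need to search or sample the outcome space instead.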

Entrants to the competition have to develop and submit an autonomous negotiating party in Java that runs on GeniusWeb. GeniusWeb is a negotiation platform in which you can develop general negotiating parties as well as create negotiation domains and preference profiles. The platform allows you to simulate negotiation sessions and run tournaments. A basic example agent that can handle the current challenge can be found on GitHub. We aim to provide a quick-start guide and FAQ on this GitHub page, which we will improve incrementally based on feedback received via e.g. Discord.

If the agents reach an agreement, then the utility of that outcome is the score for an agent. This utility is usually different for each of the two agents. A utility of 0 is obtained if no agreement is reached. This can occur for one of three reasons:

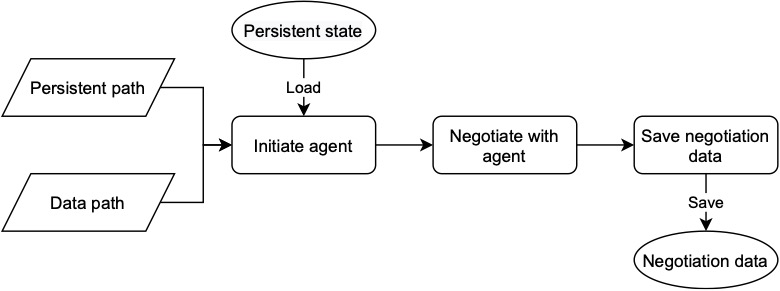

We provide a step-by-step description of the tournament from a single agent's (and thus the competitor's) perspective, illustrating the learning behaviour of the agent. The identity of an opponent is disclosed during this competition, so that your agent can learn the behaviour of every agent in the competition. There are two separate steps that we consider: negotiation and learning.

The agent is initiated with session information that contains details on the negotiation setup. It also includes a file path to the persistent state of the agent that serves as the memory of the agent and can be loaded.
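A minimal sketch of what an agent might do in this step, written in Python for brevity rather than against the GeniusWeb API. Everything here is an assumption for illustration: the JSON file format, the state layout keyed by opponent name, and the function names `load_state`, `initial_target` and `save_session`. The competition leaves the content of these files up to the agent.

```python
import json
from pathlib import Path

def load_state(state_path):
    """Return the persistent state, or an empty one before any learning step has run."""
    path = Path(state_path)
    if not path.exists():
        return {"opponents": {}}
    return json.loads(path.read_text())

def initial_target(state, opponent, default=0.9):
    """Pick an opening utility target from what is remembered about this opponent."""
    record = state["opponents"].get(opponent)
    if record is None or record["sessions"] == 0:
        return default
    # Assumption of this sketch: aim slightly above the average utility reached before.
    return min(1.0, record["total_utility"] / record["sessions"] + 0.05)

def save_session(data_path, opponent, utility):
    """Write this session's observations to the path provided for this negotiation."""
    Path(data_path).write_text(json.dumps({"opponent": opponent, "utility": utility}))
```

Because the opponent's identity is disclosed, keying the remembered data by opponent name is one natural layout, though any other structure would work equally well.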

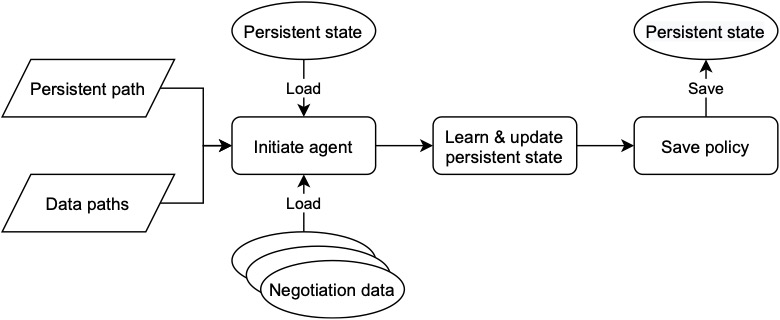

See the figure above. The agent is again initiated with session information that is needed to perform an update to the persistent state. Most notably, a list of file paths is provided that point to data of past negotiations that have not yet been processed in the persistent state. The file path to the persistent state of the agent is also provided. The agent can now load all the unprocessed data and use it to update its persistent state. For this step a deadline of 60 seconds is set, after which the agent is killed.
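The update described here could look roughly like the following Python sketch. It is not GeniusWeb code: the function name `learn`, the JSON format, and the per-opponent counters are assumptions made for illustration, and each unprocessed file is assumed to hold one session as `{"opponent": ..., "utility": ...}`.

```python
import json
from pathlib import Path

def learn(state_path, unprocessed_paths):
    """Fold not-yet-processed session files into the persistent state on disk."""
    path = Path(state_path)
    state = json.loads(path.read_text()) if path.exists() else {"opponents": {}}
    for data_path in unprocessed_paths:
        session = json.loads(Path(data_path).read_text())
        record = state["opponents"].setdefault(
            session["opponent"], {"sessions": 0, "total_utility": 0.0}
        )
        record["sessions"] += 1
        record["total_utility"] += session["utility"]
    # Write to a temporary file first, then swap it in, so that being killed
    # at the deadline is unlikely to leave a half-written state behind.
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_text(json.dumps(state))
    tmp.replace(path)
    return state
```

Keeping only running totals keeps the update cheap, which matters given the 60-second limit; an agent that stores richer data would need to budget its processing time accordingly.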